AI Image Generation Fails: Common Causes and Mistakes

Explore why some AI image generation fails, from anatomical errors to bad text prompts. Learn about common mistakes, like data limitations and poor AI training.

GENERATIVE AI

Robin Lamott

8/9/2025, 10 min read

Introduction

Artificial Intelligence (AI) has transformed countless industries, from healthcare to entertainment, by enabling machines to perform tasks that once required human intelligence. One of the most exciting applications of AI is in image generation, where models like DALL·E, Stable Diffusion, and Midjourney create stunning visuals from text prompts. These systems can produce photorealistic landscapes, imaginative surreal art, or even mimic the styles of famous artists. However, despite their remarkable capabilities, AI-generated images are not always perfect. Sometimes, the results are hilariously off-target, disturbingly distorted, or simply fail to meet basic quality standards. These "AI fails" have become a topic of fascination, sparking discussions about the limitations of current AI systems and the challenges of achieving perfection in generative tasks.

This article explores the phenomenon of AI fails in image generation, delving into why these failures occur, the most common mistakes, and the technical, ethical, and practical implications. By examining the underlying mechanisms, data limitations, and human-AI interaction challenges, we aim to provide a comprehensive understanding of why AI doesn’t always deliver flawless results and what this means for the future of generative AI.

What Are AI Fails in Image Generation?

An AI failure in image generation occurs when the output does not align with the user’s expectations or fails to meet basic quality standards. These failures can range from minor errors, like slightly distorted objects, to major blunders, such as unrecognizable forms or images that defy logic. For example, a user might prompt an AI to generate "a cat sitting on a chair," only to receive an image of a cat with six legs, a chair floating in mid-air, or a bizarre hybrid of the two. These errors are not only amusing but also reveal the complexities of training and deploying AI models for creative tasks.

AI fails are often shared on social media platforms like X, where users post bizarre or humorous outputs to highlight the quirks of generative models. These failures are more than just entertainment; they offer insights into the limitations of AI and the challenges of aligning machine outputs with human expectations.

Why Do AI Failures Happen?

To understand why AI failures occur, we need to explore the technical foundations of image generation models, the data they rely on, and the complexities of interpreting human prompts. Below, we break down the primary reasons for these failures.

1. Limitations of Training Data

AI models for image generation, such as Generative Adversarial Networks (GANs) or diffusion models, are trained on massive datasets of images and associated text. Datasets such as LAION-5B, which was assembled from Common Crawl web data, contain billions of image-text pairs scraped from the internet. While vast, these datasets are not perfect. Common issues include:

Bias and Imbalance: Training datasets often reflect the biases of the internet. For example, if a dataset contains more images of cats than rare animals like pangolins, the AI may struggle to generate accurate depictions of the latter. Similarly, cultural or stylistic biases can lead to skewed outputs, such as generating stereotypical representations of certain groups or objects.

Low-Quality or Noisy Data: Internet-scraped datasets often include low-resolution images, mislabeled content, or irrelevant data. If an AI is trained on blurry or poorly tagged images, it may produce similarly low-quality or incorrect outputs.

Limited Representation of Edge Cases: Rare or complex scenarios (e.g., "a steampunk submarine in a desert") may not be well-represented in the training data, leading to outputs that are either generic or nonsensical.

For instance, if a user requests an image of a "futuristic city with flying cars," but the training data lacks clear examples of such scenes, the AI might generate a generic cityscape with floating blobs that vaguely resemble cars. This highlights a core limitation: AI models are only as good as the data they’re trained on.
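The imbalance described above can be made concrete with a quick audit of caption text. The following is a minimal sketch, assuming captions are available as plain strings. The function names `concept_counts` and `underrepresented`, the `min_share` threshold, and the sample captions are all hypothetical and do not come from any real dataset tooling.

```python
from collections import Counter
import re

def concept_counts(captions, concepts):
    """Count how many captions mention each concept as a whole word (case-insensitive)."""
    counts = Counter({c: 0 for c in concepts})
    for caption in captions:
        words = set(re.findall(r"[a-z]+", caption.lower()))
        for concept in concepts:
            # Exact word match only: the plural "cats" does not count as "cat".
            if concept in words:
                counts[concept] += 1
    return counts

def underrepresented(counts, min_share=0.1):
    """Return concepts whose share of all concept mentions falls below min_share."""
    total = sum(counts.values())
    if total == 0:
        return sorted(counts)
    return sorted(c for c, n in counts.items() if n / total < min_share)

# Hypothetical captions standing in for a scraped dataset.
captions = [
    "a cat on a sofa", "cat sleeping", "black cat at night",
    "a cat and a dog", "dog in a park", "a pangolin in the wild",
    "cat portrait", "two cats playing", "a dog running", "cat in a box",
]
counts = concept_counts(captions, ["cat", "dog", "pangolin"])
rare = underrepresented(counts, min_share=0.15)
print(counts, rare)
```

A real audit would need stemming, synonyms, and image-level checks, but even a word count like this can show which concepts a model has barely seen.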

2. Complexity of Text-to-Image Translation

Image generation models rely on understanding and interpreting text prompts, which is a challenging task. Natural language is inherently ambiguous, and human expectations for visual output can vary widely. Several factors contribute to failures in this process:

Ambiguity in Prompts: A prompt like "a dog in a hat" could mean a dog wearing a hat, a dog sitting next to a hat, or even a hat shaped like a dog. Without clear context, the AI may misinterpret the user’s intent.

Complex or Contradictory Prompts: Prompts that combine multiple concepts, such as "a medieval knight riding a dragon in a cyberpunk city," can confuse the model, especially if it hasn’t seen similar combinations during training. The AI might prioritize one element (e.g., the knight) while neglecting others (e.g., the cyberpunk aesthetic).

Lack of Common Sense: AI models lack human-like common sense. For example, a prompt for "a person eating spaghetti with a spoon" might result in an image where the person is holding the spoon upside down or the spaghetti is floating, as the model doesn’t inherently understand the mechanics of eating.

These issues are compounded by the fact that text-to-image models must map abstract language to concrete visual elements, a process that requires balancing creativity with precision.

3. Architectural Limitations of AI Models

The architecture of generative AI models, while sophisticated, has inherent limitations that contribute to failures. Key issues include:

Overfitting or Underfitting: If a model is overfitted to its training data, it may produce repetitive or overly specific outputs. Conversely, underfitting can lead to generic or incoherent images. Balancing these extremes is a persistent challenge.

Latent Space Constraints: Latent diffusion models such as Stable Diffusion operate in a latent space, a compressed representation of visual data. If certain concepts or styles are poorly represented in this space, the AI may struggle to generate accurate images for those prompts.

Resolution and Detail Limitations: Generating high-resolution images with fine details is computationally intensive. Some models sacrifice detail for speed, resulting in blurry or incomplete outputs, especially for complex scenes.

For example, GAN-based models are notorious for producing artifacts like distorted faces or unnatural textures when pushed beyond their capabilities. Diffusion models, while more robust, can still generate inconsistencies, such as mismatched lighting or proportions.

4. Stochastic Nature of Generative Models

Generative AI models are inherently probabilistic, meaning they sample from a range of possible outputs rather than producing a single deterministic result. This stochasticity can lead to variability in quality. For instance:

Random Sampling Errors: When generating an image, the model samples from a probability distribution in its latent space. A poor sample can result in an image that deviates significantly from the desired output.

Sensitivity to Parameters: Parameters like sampling temperature (which controls randomness in models that expose it) or guidance scale (which controls how strongly the output follows the prompt) can drastically affect the output. Poorly chosen settings may lead to images that are too chaotic or overly rigid.

This randomness is both a strength and a weakness: it allows for creative diversity but also increases the likelihood of generating flawed or unexpected results.
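To make the role of the seed and the guidance scale more tangible, here is a small sketch using toy numbers instead of a real model. It assumes the classifier-free guidance rule commonly used with diffusion models, where the final prediction is the unconditional prediction plus the guidance scale times the difference between the conditional and unconditional predictions. The function names and the example vectors are illustrative assumptions.

```python
import random

def sample_noise(seed, size=4):
    """Draw a starting noise vector; the same seed always yields the same vector."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(size)]

def apply_guidance(uncond, cond, guidance_scale):
    """Classifier-free guidance: start at the unconditional prediction and move
    toward the prompt-conditioned one, scaled by guidance_scale."""
    return [u + guidance_scale * (c - u) for u, c in zip(uncond, cond)]

# Toy predictions standing in for a model's outputs (hypothetical values).
uncond = [0.0, 1.0, 2.0]
cond = [1.0, 1.0, 0.0]

print(apply_guidance(uncond, cond, 0.0))   # scale 0: the prompt is ignored
print(apply_guidance(uncond, cond, 1.0))   # scale 1: the plain conditional prediction
print(apply_guidance(uncond, cond, 7.5))   # high scale: pushed past the conditional prediction
print(sample_noise(42) == sample_noise(42))  # fixed seed reproduces the starting noise
```

In this picture, a very high scale exaggerates whatever separates the prompted prediction from the unprompted one, which is one way to read the "overly rigid" results mentioned above, while a changed seed alone is enough to produce a different image from the same prompt.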

5. Human-AI Interaction Challenges

The interaction between users and AI systems plays a significant role in the quality of outputs. Common issues include:

Poorly Crafted Prompts: Novice users may not know how to write effective prompts, leading to vague or overly complex requests that confuse the model. For example, a prompt like "make something cool" is too ambiguous to produce a coherent result.

Expectation Mismatch: Users may have unrealistic expectations about what AI can achieve. For instance, expecting a model to perfectly replicate a specific artwork or generate a hyper-detailed scene with limited computational resources can lead to disappointment.

Lack of Feedback Loops: Unlike human artists, AI models don’t receive real-time feedback during the generation process. If a user doesn’t like the output, they usually have to revise the prompt and regenerate, which can turn into a lengthy trial-and-error process.

Prompt engineering, the art of crafting precise and detailed prompts, has emerged as a skill to mitigate these issues, but it remains inaccessible to casual users.
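One way to make prompts less vague is to fill in a small template before sending anything to a model. The sketch below is only an illustration: the `PromptSpec` fields and the `build_prompt` helper are hypothetical, and no particular model is guaranteed to interpret the resulting string as intended.

```python
from dataclasses import dataclass, field

@dataclass
class PromptSpec:
    subject: str                 # what the image is of
    action: str = ""             # what the subject is doing or wearing
    setting: str = ""            # where the scene takes place
    style: str = ""              # artistic style, if any
    details: list = field(default_factory=list)  # extra descriptors

def build_prompt(spec):
    """Join the non-empty parts into one comma-separated prompt string."""
    core = " ".join(part for part in (spec.subject, spec.action) if part)
    parts = [core]
    if spec.setting:
        parts.append(spec.setting)
    if spec.style:
        parts.append(f"in the style of {spec.style}")
    parts.extend(spec.details)
    return ", ".join(parts)

vague = build_prompt(PromptSpec(subject="something cool"))
specific = build_prompt(PromptSpec(
    subject="a dog",
    action="wearing a red hat",
    setting="on a sunny beach",
    style="a watercolor painting",
    details=["soft lighting"],
))
print(vague)
print(specific)
```

Spelling out the relationship ("wearing a red hat") removes the ambiguity of "a dog in a hat", and the empty fields in the vague version show exactly what information the model is being left to guess.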

Common Types of AI Fails in Image Generation

AI failures occur in various ways, each revealing different aspects of the model’s limitations. Below are the most common types of errors observed in AI-generated images.

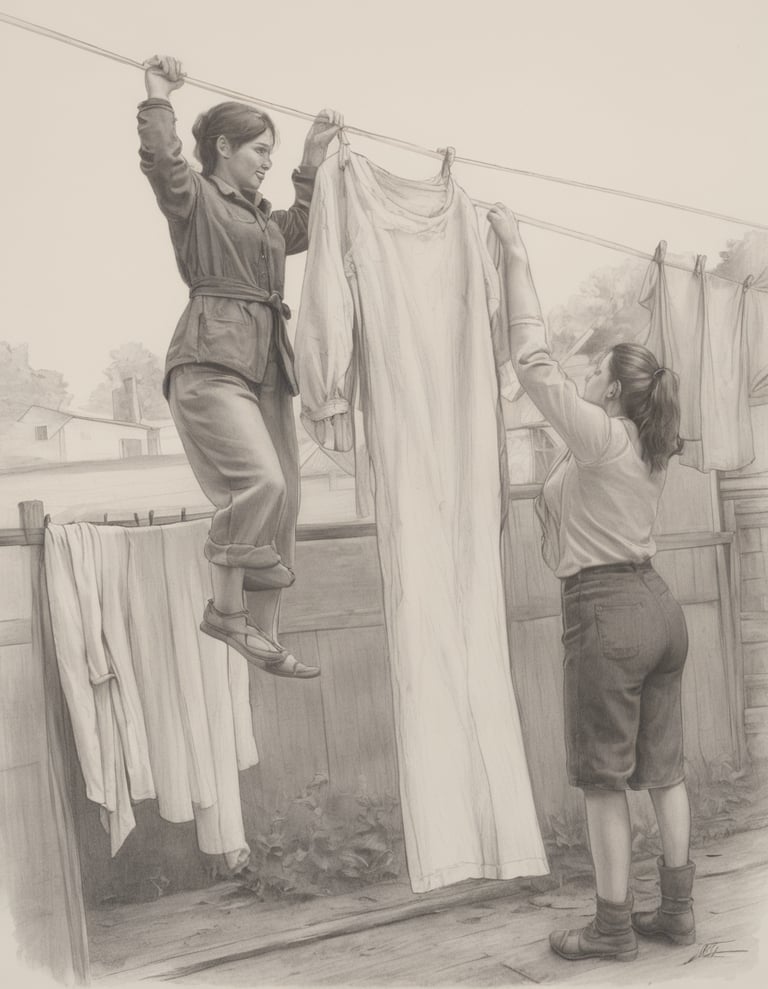

1. Anatomical Errors

One of the most noticeable AI fails is anatomical inaccuracies, particularly in humans and animals. Examples include:

Extra Limbs or Fingers: AI often struggles with counting fingers or limbs, leading to humans with six fingers or animals with extra legs.

Distorted Faces: Facial features may be misaligned, with eyes in unnatural positions or mouths that appear melted.

Unnatural Proportions: Bodies may have disproportionate arms, legs, or heads, creating an uncanny valley effect.

These errors often stem from insufficient training data for specific poses or angles, as well as challenges in modeling complex 3D structures in 2D images.

2. Object Inconsistencies

Objects in AI-generated images can appear illogical or physically impossible. Common issues include:

Floating or Misplaced Objects: A chair might float above the ground, or a car might have wheels on its roof.

Mismatched Scales: Objects may have incorrect sizes relative to each other, such as a tiny person next to a giant apple.

Blended Objects: The AI may fuse unrelated objects, like a tree with car-like features or a book with animal characteristics.

These errors often occur when the model struggles to disentangle different concepts in the latent space or misinterprets the relationships between objects in the prompt.

3. Text Rendering Issues

Generating coherent text within images, such as signs or labels, is a notorious challenge for AI. Common failures include:

Garbled Text: Words may appear as random strings of characters or distorted symbols.

Incorrect Fonts or Styles: Text may not match the style of the scene, such as a neon sign in a medieval setting.

Misspelled Words: The AI may generate text with spelling errors or nonsensical phrases.

Text rendering is difficult because most image generation models are trained on image-caption pairs rather than explicitly trained to draw legible characters, and their architectures prioritize visual patterns over linguistic accuracy.
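Garbled text can be caught automatically if the text requested in the prompt is compared with what an OCR tool reads back from the image. The sketch below assumes the OCR result is already available as a string (no OCR library is called here) and uses Python's standard `difflib` for the comparison. The helper names and the 0.8 threshold are arbitrary assumptions.

```python
from difflib import SequenceMatcher

def text_similarity(intended, recognized):
    """Similarity in [0, 1] between intended and recognized text,
    ignoring case and repeated whitespace."""
    a = " ".join(intended.lower().split())
    b = " ".join(recognized.lower().split())
    return SequenceMatcher(None, a, b).ratio()

def text_looks_garbled(intended, recognized, threshold=0.8):
    """Flag the image when the recognized text is too far from the intended text."""
    return text_similarity(intended, recognized) < threshold

# Hypothetical OCR readings of a generated street sign.
print(text_looks_garbled("Main Street", "main  street"))
print(text_looks_garbled("Main Street", "Xzqpl Wrtgh"))
```

A check like this only works when the intended wording is known in advance, and the threshold would need tuning against real outputs.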

4. Stylistic Inconsistencies

When users request images in specific artistic styles (e.g., "a landscape in the style of Van Gogh"), the AI may fail to maintain consistency. Common issues include:

Mixed Styles: The image might blend elements of multiple styles, such as a photorealistic face with cartoonish backgrounds.

Incomplete Style Application: The style may only apply to parts of the image, leaving other areas looking generic or out of place.

Lack of Cohesion: The image may lack a unified aesthetic, with clashing colors or textures.

These failures often result from limited training data for specific styles or difficulties in generalizing stylistic features across diverse prompts.

5. Logical or Contextual Errors

AI-generated images sometimes defy logic or context, producing surreal or absurd results. Examples include:

Impossible Physics: Objects may violate physical laws, such as water flowing upward or shadows cast in the wrong direction.

Inappropriate Context: A prompt for "a chef in a kitchen" might result in a chef cooking in a forest.

Cultural Missteps: The AI may produce culturally insensitive or inaccurate depictions, such as traditional clothing from the wrong region.

These errors highlight the AI’s lack of real-world knowledge and its reliance on statistical patterns rather than reasoning.

6. Artifacts and Noise

Low-level technical issues can also degrade image quality. Common artifacts include:

Blurriness: Parts of the image may appear out of focus, especially in high-resolution outputs.

Visual Noise: Random patterns or graininess may appear, particularly in complex scenes.

Seams or Distortions: Visible seams or warping may occur where the model struggles to stitch together different parts of the image.

These issues are often tied to the model’s resolution limits or the computational constraints of the generation process.
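Blurriness in particular can be estimated with a simple heuristic that is widely used as a focus measure: the variance of the Laplacian, where low variance suggests few sharp edges. The sketch below is a pure-Python illustration on tiny toy grids, not a production detector. It assumes the image is already a 2D list of grayscale values, and any cutoff for "too blurry" would have to be chosen empirically.

```python
def laplacian_variance(gray):
    """Variance of the 4-neighbour Laplacian over the interior pixels of a 2D grayscale grid."""
    rows, cols = len(gray), len(gray[0])
    responses = []
    for y in range(1, rows - 1):
        for x in range(1, cols - 1):
            lap = (gray[y - 1][x] + gray[y + 1][x]
                   + gray[y][x - 1] + gray[y][x + 1]
                   - 4 * gray[y][x])
            responses.append(lap)
    if not responses:
        return 0.0
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)

# Toy 6x6 images: a sharp checkerboard and a completely flat patch.
sharp = [[255 if (x + y) % 2 == 0 else 0 for x in range(6)] for y in range(6)]
flat = [[128] * 6 for _ in range(6)]

print(laplacian_variance(sharp) > laplacian_variance(flat))
```

The flat patch scores zero because it has no edges at all, while the checkerboard scores very high. Real images fall somewhere in between.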

Case Studies of Notable AI Fails

To illustrate the scope of AI failures, let’s examine a few real-world examples that have gained attention on platforms like X:

The Six-Fingered Hand: A user prompted an AI to generate "a realistic portrait of a person holding a book." The result was a photorealistic human with six fingers on one hand, which went viral for its eerie realism combined with an obvious error. This failure likely stemmed from the model’s difficulty in modeling hand anatomy, a common challenge due to the variability of hand poses in training data.

The Floating Dog: A prompt for "a dog on a beach" produced an image of a dog seemingly levitating above the sand, with no shadow or contact with the ground. This error highlights the AI’s struggle with spatial relationships and physics.

The Nonsensical Sign: An image of a city street included a sign with gibberish text, like "Xzqpl Wrtgh." This fail underscores the limitations of text rendering in current models, as the AI prioritized visual aesthetics over linguistic accuracy.

These cases demonstrate how AI failures can range from amusing to unsettling, often reflecting deeper technical challenges.

Implications of AI Fails

AI failures in image generation have broader implications for both users and developers. Understanding these implications is crucial for improving AI systems and managing expectations.

1. User Trust and Adoption

Frequent or severe AI failures can erode user trust in generative technologies. If users consistently receive poor-quality outputs, they may be less likely to rely on AI for creative or professional tasks. Developers must balance showcasing AI’s capabilities with transparency about its limitations to maintain user confidence.

2. Ethical Concerns

Some AI failures raise ethical questions, particularly when they involve biased or offensive outputs. For example, generating stereotypical or culturally insensitive images can perpetuate harmful narratives. Developers must address these issues through better data curation and model oversight to ensure responsible AI use.

3. Opportunities for Improvement

AI failures can provide valuable feedback for developers. By analyzing common errors, researchers can identify weaknesses in training data, model architectures, or user interfaces. For instance, anatomical errors might prompt improvements in 3D modeling techniques, while text rendering issues could lead to specialized training for text generation.

4. Creative Potential

Paradoxically, AI failures can also inspire creativity. Many artists and designers embrace the quirks of AI-generated images, using them as starting points for unique artworks. The surreal or unexpected nature of some fails can spark new ideas that a perfectly accurate model might not produce.

Strategies to Mitigate AI Fails

While AI failures are unlikely to disappear entirely, several strategies can reduce their frequency and severity:

Improved Training Data: Curating higher-quality, more diverse datasets can help models better understand rare or complex concepts. Techniques like data augmentation or synthetic data generation can also fill gaps in existing datasets.

Advanced Model Architectures: Innovations in model design, such as hybrid GAN-diffusion models or attention mechanisms tailored for specific tasks (e.g., text rendering), can improve output quality.

Better Prompt Engineering: Educating users on how to write clear, specific prompts can reduce ambiguity and improve results. Tools like prompt suggestion systems or interactive interfaces can also help.

Post-Processing and Validation: Applying post-processing techniques, such as artifact removal or anatomical correction, can enhance image quality. Validation checks could flag obvious errors before presenting the output to users.

User Feedback Integration: Allowing users to provide feedback on failed outputs can help developers fine-tune models and prioritize areas for improvement.

Hybrid Human-AI Workflows: Combining AI generation with human editing can mitigate failures. For example, an AI-generated image with minor errors can be refined by a human artist using tools like Photoshop.
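The validation idea from the list above can be sketched as a retry loop: generate, run checks, and regenerate with a new seed if any check fails. Everything here is a stand-in. `fake_generate` imitates a model call, and the `fingers_per_hand` field imitates the output of a hypothetical hand detector; neither refers to a real library.

```python
import random

def generate_with_validation(generate, checks, max_attempts=4, seed=0):
    """Call generate(seed) until every check passes or attempts run out.

    Returns (result, attempts_used, passed)."""
    rng = random.Random(seed)
    result = None
    for attempt in range(1, max_attempts + 1):
        result = generate(rng.randrange(2**32))
        if all(check(result) for check in checks):
            return result, attempt, True
    return result, max_attempts, False

def fake_generate(seed):
    # Stand-in for a real model call: returns hypothetical detector metadata.
    fingers = 5 if seed % 2 == 0 else 6
    return {"seed": seed, "fingers_per_hand": [5, fingers]}

def hands_ok(result):
    """Pass only if every detected hand has exactly five fingers."""
    return all(n == 5 for n in result["fingers_per_hand"])

result, attempts, passed = generate_with_validation(fake_generate, [hands_ok], max_attempts=10)
print(attempts, passed)
```

Capping the number of attempts matters in practice, since each regeneration costs compute and some prompts may never pass a strict check. When the cap is reached, the last result is returned with `passed` set to `False` so a human can decide what to do with it.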

The Future of AI Image Generation

The field of AI image generation is rapidly evolving, and many of the current limitations are likely to be addressed in the coming years. Advances in areas like few-shot learning, where models can generalize from limited examples, and multimodal AI, which integrates text, images, and other data, promise to reduce failures. Additionally, as computational power increases, models will be able to generate higher-resolution images with greater detail and accuracy.

However, perfection may remain elusive. The inherent complexity of human creativity, combined with the probabilistic nature of generative models, means that some degree of unpredictability will persist. Rather than viewing AI fails as flaws, we can see them as opportunities to learn, innovate, and even find humor in the imperfections of machine intelligence.

Conclusion

AI failures in image generation are a fascinating window into the strengths and limitations of current AI systems. From anatomical errors to nonsensical text, these failures arise from a combination of data limitations, architectural constraints, and the challenges of translating human intent into visual output. While they can be frustrating, they also offer valuable lessons for improving AI models and fostering collaboration between humans and machines. As the technology advances, we can expect fewer fails, but the quirks and surprises of AI-generated art will likely remain a source of amusement and inspiration for years to come.

By understanding why AI failures occur and how to mitigate them, we can better harness the potential of generative AI while appreciating its imperfections. Whether you’re a developer, artist, or casual user, the journey of exploring AI’s creative boundaries is as enlightening as it is entertaining.
